A well-crafted survey is pivotal in yielding valuable data for a B2B quantitative research study. But designing a survey for maximum impact isn’t just about answering key business questions and recruiting high-quality respondents. It’s equally important to think carefully about which question types you include and which you leave out.

Certain question types introduce complexities that can turn the data analysis phase into a daunting challenge. Researchers who don’t steer clear of them end up grappling with ambiguities that make analysis take longer than it needs to. In the worst case, these problems make it difficult to discern what a respondent actually meant when answering a given question.

Challenging Survey Questions to Avoid in B2B Quantitative Research

Here are just a few types of questions that can create challenges for market researchers and clients alike.

Double-Barreled Questions

Double-barreled questions are, in essence, two questions combined into one. Sometimes this happens innocently, and a researcher doesn’t realize they have included a double-barreled question. Other times, the temptation to shorten a survey to make room for an additional question on a different subject leads to the same mistake.

For example, if we asked respondents a double-barreled question about a “collaborative work management” tool, it might look something like, “Which of the following tools do you like the most and feel is the most user-friendly?”

At first glance, this question seems simple enough. In a qualitative interview, a researcher could easily make sure both parts of the question received a complete answer. A quantitative survey, however, allows no such back-and-forth. The question also assumes that user-friendliness goes hand in hand with favorability, which is not guaranteed.

Instead, a researcher might start by asking, “Which of the following tools do you like the most?” Leading with a narrow question leaves room to expand on a respondent’s answer via more detailed questions such as, “What attributes do you associate with this tool?” or “Which tool do you feel is the most user-friendly?”

In short, focus on asking distinct questions and following up appropriately after each one.

Open-Ended Questions

These are questions that allow respondents to answer in their own words. While open-ended questions can provide rich, nuanced insights, they can be time-consuming and complex to assess, especially with large sample sizes. Coding responses, categorizing them into meaningful groups, and interpreting them can all be challenging.

Another challenge with open-ended questions is the rise of AI models like ChatGPT. Using these tools, respondents can swiftly generate seemingly genuine but inauthentic responses, particularly when motivated by incentives.

While open-ended questions are best avoided whenever possible, some concepts, such as unaided awareness, can only be measured with them. For example, “What are the top brands/companies that come to mind when you think about video conferencing software?” must be asked in an open-ended format, because listing brands as answer options would prompt respondents and measure aided awareness instead.

Verbatim comments about other topics are valuable and add a lot of texture to a quant study. However, always weigh each open-ended question’s relevance to your research objectives, and balance open-ended questions with structured ones. Supplementing your survey with in-depth interviews or focus groups can also provide a more comprehensive understanding of respondents’ thoughts and experiences.

Rating Scale Questions with Ambiguous Scales

Rating scales are common in surveys. However, it’s essential to define each point on the scale clearly so that respondents and analysts interpret it the same way. Ambiguity in scales, such as whether a 5 on a 1-to-5 scale indicates “excellent” or just “above average,” can lead to misinterpretation of results.

Overly granular scales without clear labels cause even more confusion. For example, on a 1-to-10 scale, respondents may struggle to decide whether their opinion is a 3 or a 4, or a 7 or an 8.

When your survey needs a scale question, use a limited number of clearly labeled points. This keeps responses consistent and makes the results easier to analyze accurately.

Grids/Matrix Questions

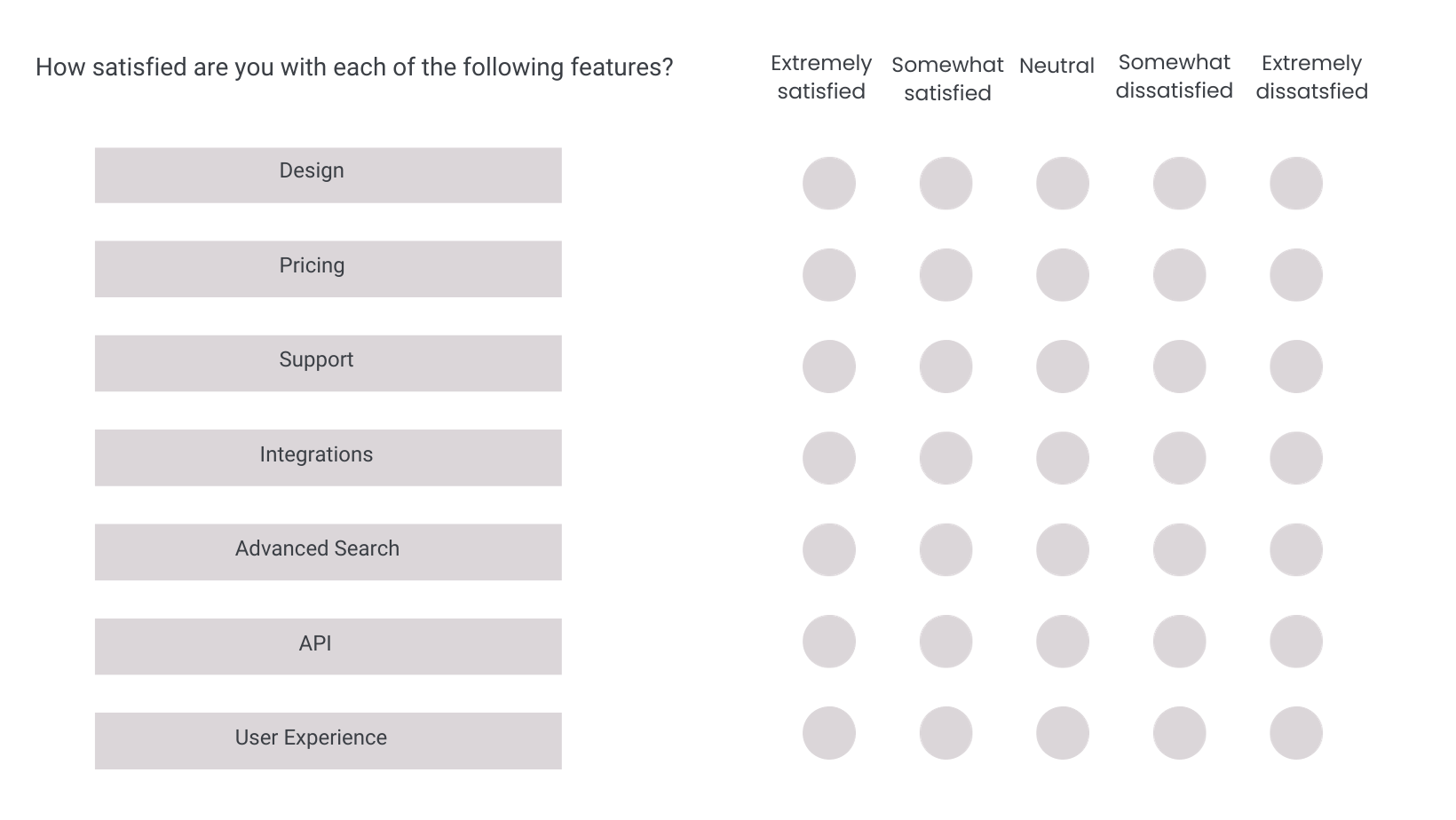

Grid or matrix questions are designed to simplify numerous multiple-choice inquiries into a more manageable format. An example may look something like this:

Although grids present a comprehensive view of the subject matter all at once, they can be cumbersome and time-consuming for participants to answer. The sheer number of rows and columns may cause respondents to disengage and pick answers at random, or select the same column for every row, just to finish faster. Matrix questions also tend to display poorly on mobile devices, where small screens make large grids hard to read and complete.

Matrix questions also create a significant challenge during the analysis phase. When disengaged participants leave many answers incomplete or careless, researchers face the daunting task of organizing, categorizing, and analyzing responses they cannot fully trust.

A straightforward solution is to pose the questions separately, an approach known as the “item-by-item format.” Breaking the matrix into individual questions can improve predictive validity, likely because participants spend more time considering each question.

Likert Scales

A Likert scale asks respondents to rate a statement on a scale, typically with five or seven points. The choices generally range from Strongly Agree to Strongly Disagree, giving the survey designer a holistic view of people’s opinions. Scales with an odd number of points also include a midpoint (e.g., “neither agree nor disagree”) for respondents who are neutral.

Likert scales are a valuable tool, but they come with their own set of challenges. Different respondents may interpret Likert scales differently, impacting the consistency of responses. This variance in interpretation can complicate data analysis and potentially lead to misleading conclusions.

Additionally, respondents often avoid selecting extreme responses, a tendency known as central tendency bias, which reduces variability in the data. Traditional Likert scales also lack a “don’t know” or “not applicable” option, which can force respondents into inaccurate choices.

Trade-offs and Forcing Choices

Avoid questions that produce uniform results and therefore offer little insight. Forcing respondents to prioritize can reveal more meaningful data.

For example, instead of asking a generic question like, “How important is cost during a software evaluation?” which might result in a high percentage of respondents stating that it’s “very important,” consider asking, “What are the most important criteria during a software evaluation? Select up to two.” This approach offers more nuanced insights into the factors that truly matter to your audience.

Leading or Biased Questions

Last but not least, leading or biased questions distort the quality of your data and hinder meaningful analysis. Because they nudge respondents toward a particular answer, the results no longer reflect what respondents actually think, and any interpretation built on them becomes unreliable.

Instead of posing questions that might sway respondents in a specific direction, use clear, concise, and neutral language to obtain reliable results. A related problem arises when a timeframe is not clearly defined. For instance, asking “What purchases have you made in the last 6 months?” instead of “What purchases have you made recently?” removes ambiguity about the period in question and ensures clear, comparable responses.

B2B Quantitative Research: Avoid Asking the Wrong Questions

“It is not enough to ask the right questions. You must also avoid asking the wrong ones.” – Anne Bishop

The right survey questions serve as a catalyst, delivering valuable data in a B2B quantitative study. To protect the integrity of that data, however, it’s crucial to deliberately omit challenging questions that will introduce unnecessary complexity when you reach the analysis phase.

Muddled, overly complicated data can undermine the entire foundation of your research and the business decisions that follow. So if you want assurance that your next quant project will yield trustworthy results, give us a call. With 16 years of experience conducting research for B2B tech, we have the expertise to design a survey that instills confidence in your outcomes.

Cascade Insights is a hybrid market research and marketing firm that specializes in the B2B tech sector. For more than 16 years, we have conducted powerful B2B market research. To learn more, visit our B2B market research page.