Agentic AI is having a moment, but not the one many expected.

Touted as the next leap beyond chatbots and linear automation, Agentic AI systems promise to handle multi-step, context-rich workflows with minimal human input. These “autonomous agents” aren’t just executing tasks; they’re reasoning, making decisions, and adapting as they go.

But despite the buzz, adoption is stalling. Recent studies show that many Agentic AI initiatives are slipping into the trough of disillusionment, the phase of the Gartner Hype Cycle where grand promises hit the wall of real-world complexity.

To succeed, Agentic AI vendors must look beyond technical capability and toward deep, human-centered understanding. They need to know how work actually gets done, not just how it’s documented.

That’s where Agentic AI market research comes in. It reveals the hidden patterns, pain points, and human decision-making that can make or break an intelligent agent. And in a landscape flooded with overhyped tools, this kind of insight is what separates enduring innovation from short-lived experiments.

The Adoption Gap: Why Agentic AI Is Struggling to Gain Traction

AI-driven workflow tools like Zapier, Make.com, and Lindy, along with basic LLM-powered chatbots, have already crossed the chasm into mainstream adoption. These tools thrive because they deliver clear, narrow value: automating predictable, rule-based tasks with minimal risk or disruption.

LLM-powered chatbots excel at handling natural language tasks that follow clear intent and structure, like answering FAQs, summarizing content, or generating draft responses. Similarly, platforms like Zapier and Make.com follow user-defined scripts to connect apps and move data across systems. Their success lies in the fact that they don’t attempt to outthink the user; they simply execute predefined instructions.

Agentic AI systems, on the other hand, represent a much bigger leap. Solutions like Manus, Auto-GPT, and various LangChain agents are designed to handle multi-step, context-rich workflows with limited human intervention. These tools promise to not only complete tasks but also reason through decisions, manage shifting objectives, and adapt their behavior as new information emerges.

Agentic AI has yet to gain comparable traction: just 2% of organizations have deployed Agentic AI at scale, and fewer than a quarter have even completed pilot programs.

Where Agentic AI Breaks Down in the Real World

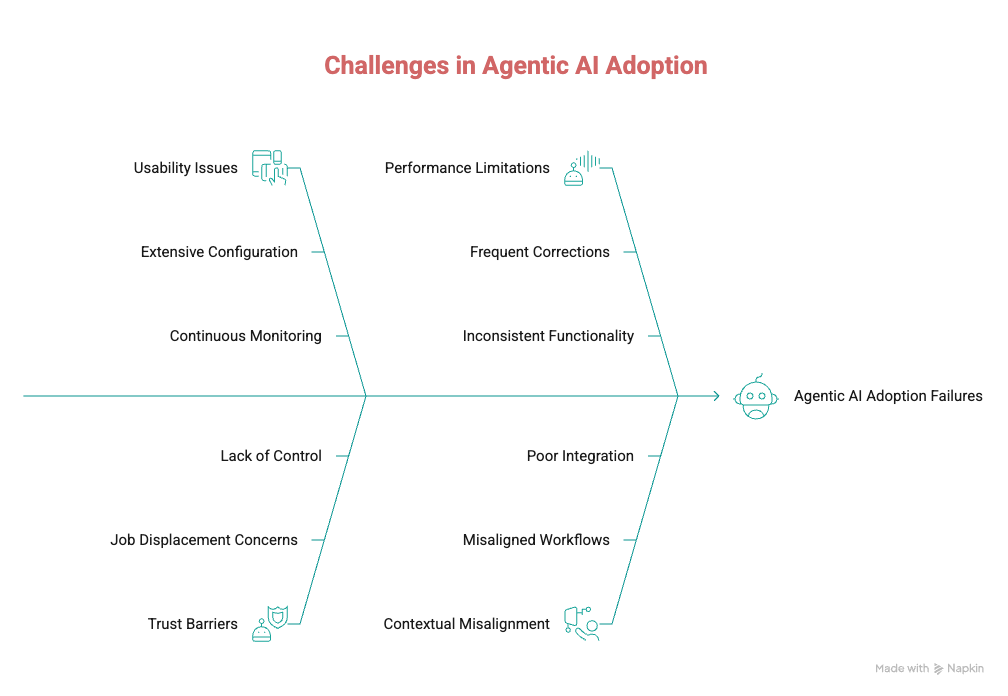

So what’s holding Agentic AI back? Most deployments remain experimental, with early enthusiasm often stalling due to usability issues, trust barriers, and performance limitations in real environments.

Many Agentic AI systems promise to reduce manual effort, but the reality often feels more like “re-orchestration” than true automation. While these tools aim to free users from managing tasks, they frequently demand extensive configuration, constant supervision, and frequent corrections just to stay on track.

Furthermore, some solutions position themselves as complete replacements for human roles rather than supportive co-pilots. This framing often triggers concerns about job displacement and loss of control, so users resist the tool instead of embracing it. And when the solution fails to deliver, it’s not just a product misstep; the failure also weighs on the teams and leaders who championed the investment in Agentic AI.

These breakdowns, both in adoption and organizational trust, often point to something deeper than poor execution. They stem from a more fundamental issue: contextual misalignment. These systems frequently falter not because they lack capability, but because they’re misaligned with the way people actually work.

Idealized Workflows vs. Real Workflows

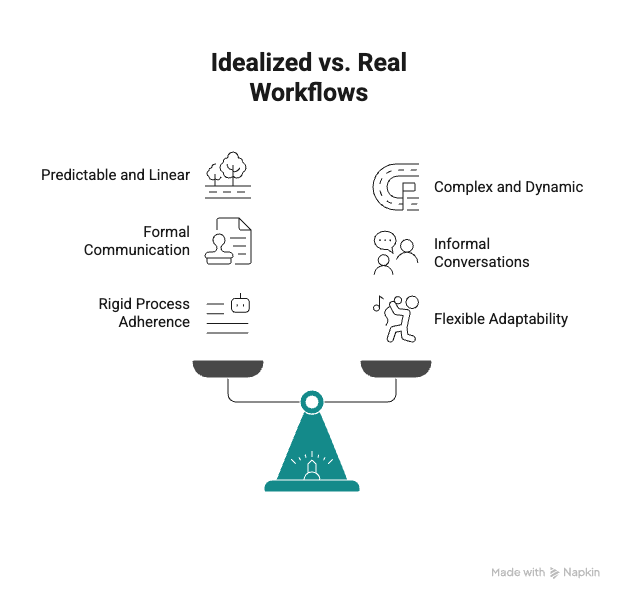

Agentic AI tends to operate on idealized process maps: step-by-step diagrams where everything flows logically. But in practice, workflows are full of:

- Informal conversations

- Tacit shortcuts

- Unspoken social cues

- Exceptions that fall outside the documented process

When agents ignore these fuzzy elements, they underperform – even when executing their programmed instructions perfectly.

Real-World Examples of Agentic AI Misalignment

Missed Human Nuance in Employee Onboarding

Consider this example: A midsize SaaS company deployed an Agentic AI system to manage employee onboarding. The agent flawlessly handled document collection, account provisioning, and automated welcome emails. However, it completely missed the parts of the process that were crucial for the human experience, like Slack check-ins, one-on-one calls with onboarding buddies, and impromptu expectation-setting meetings between managers and new hires.

The result? Confusion, missed context, and extra work for human staff who had to fill in the gaps. The agent didn’t fail at automation; it failed at empathy.

Marketing Campaign Management Without Real-Time Context

Imagine a marketing team that piloted an agent to manage campaign requests and workflows. On paper, the system accurately tracked project stages, deadlines, and dependencies. But in practice, campaign priorities were often reshuffled informally, through hallway conversations, Slack DMs, or executive pivots that never made it into the project management tool.

The agent, unaware of these crucial human signals, continued to prioritize tasks based on outdated inputs. This mismatch led to misaligned deliverables, stakeholder frustration, and eventually, the agent being shelved. It did what it was told, but not what was actually needed.

Resume Screening That Ignores What Really Matters

Even tasks that seem straightforward, like reviewing resumes for a job posting, can fall apart under the weight of real-world nuance. An Agentic AI system might be trained to match keywords, titles, or experience levels, but it can easily overlook informal hiring criteria: the hiring manager’s preference for culture fit, past successes with unconventional candidates, or the nuance behind a “nontraditional” career path. As a result, qualified candidates get screened out, and hiring teams spend more time undoing the agent’s decisions than moving forward with the process. The agent didn’t fail at filtering, but rather at understanding what actually matters to the team.

These failures aren’t edge cases; they’re the norm. In most organizations, the “official” process only tells part of the story. What’s missing are:

- Workarounds that individuals rely on to move faster

- Informal checkpoints that ensure alignment

- Emotional intelligence cues like urgency, tone, and hesitation

- Exceptions and edge cases that are handled manually because they’re too weird to document

Agentic AI systems can’t operate effectively if they don’t account for these invisible dynamics. And you can’t capture them just by analyzing a process map – you have to talk to the people doing the work.

Agentic AI Market Research: Strategies that Drive User Adoption

What Agentic AI vendors need isn’t just smarter models or more features. They need clarity about:

- What users are actually trying to accomplish

- Which parts of a workflow are ripe for automation, and which are off-limits

- What language builds trust, and what triggers fear

This is where strategic B2B market research becomes a critical differentiator. Research methods like Jobs-to-be-Done (JTBD) interviews, buyer persona development, message testing, and competitive analysis equip vendors with the human insight needed to build, position, and scale agentic systems successfully.

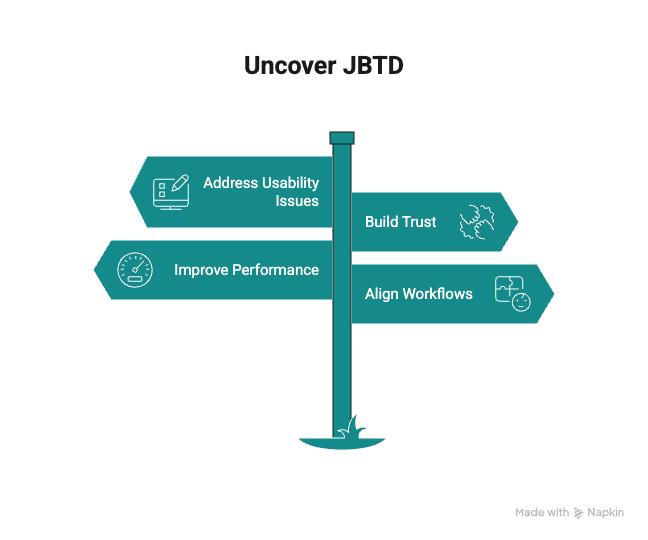

Jobs-to-be-Done (JTBD) Research: Uncovering Core Needs

JTBD research moves beyond superficial features to map the actual user goals, underlying motivations, emotional drivers, and functional constraints around a specific “job” they need done.

Why it works: Helps identify what problem users are truly trying to solve, allowing Agentic AI to be designed as a solution to that core need, not just a technological marvel.

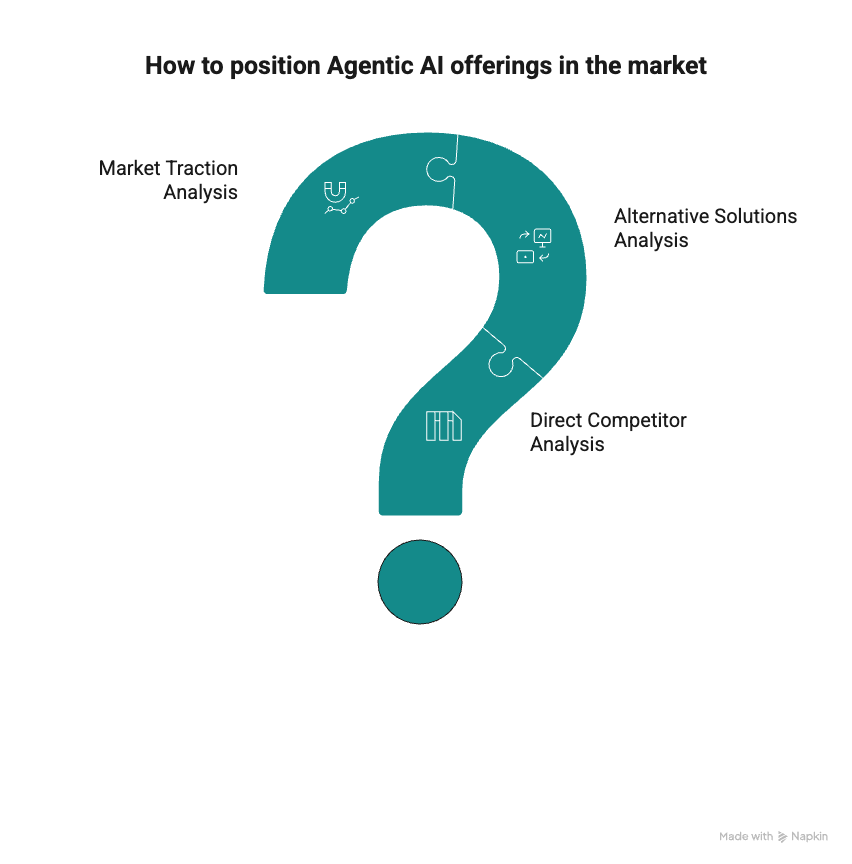

Competitive and Alternative Analysis: Positioning for Success

Uncover how competitors (both direct Agentic AI players and alternative solutions) are positioning their offerings. Analyze their value propositions, strengths, weaknesses, and which models (e.g., fully autonomous vs. human-in-the-loop) are gaining traction in specific markets.

Why it works: Identifies opportunities for differentiation, helps refine the unique selling proposition (USP), and clarifies the competitive landscape (e.g., Agentic AI vs. RPA vs. task-based LLM tools).

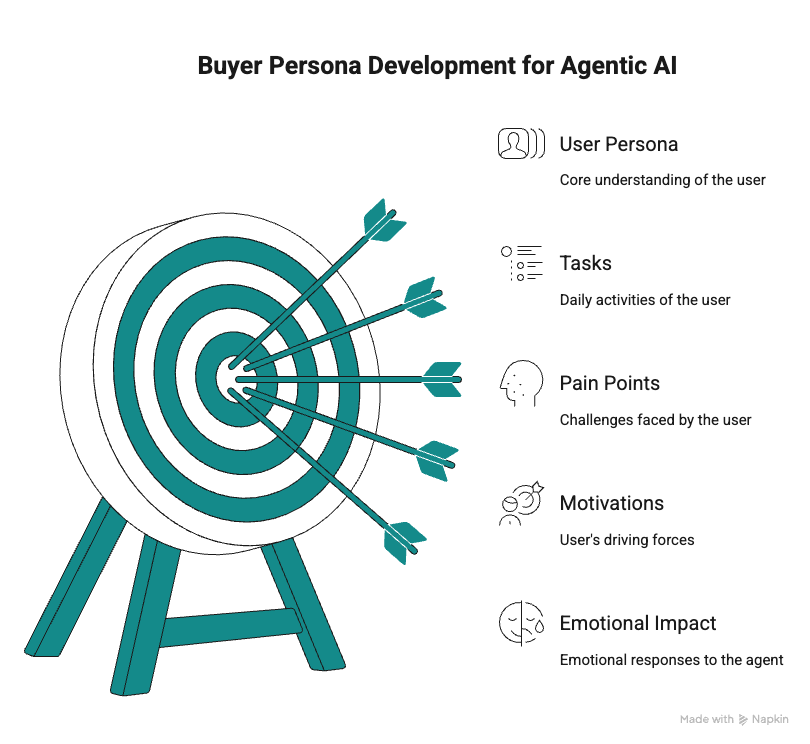

Buyer Persona Development: Who Is This Agent Helping?

Develop detailed user personas that identify who the agent is actually helping, their day-to-day tasks, pain points, motivations, and how their needs differ across verticals or functions. In-depth interviews (IDIs) with operational leads, power users, workflow owners, and even those resistant to automation will uncover the human processes, hidden complexities, and emotional responses the agent aims to impact.

Why it works: Ensures the Agentic AI is designed with the actual user in mind, leading to higher adoption and satisfaction.

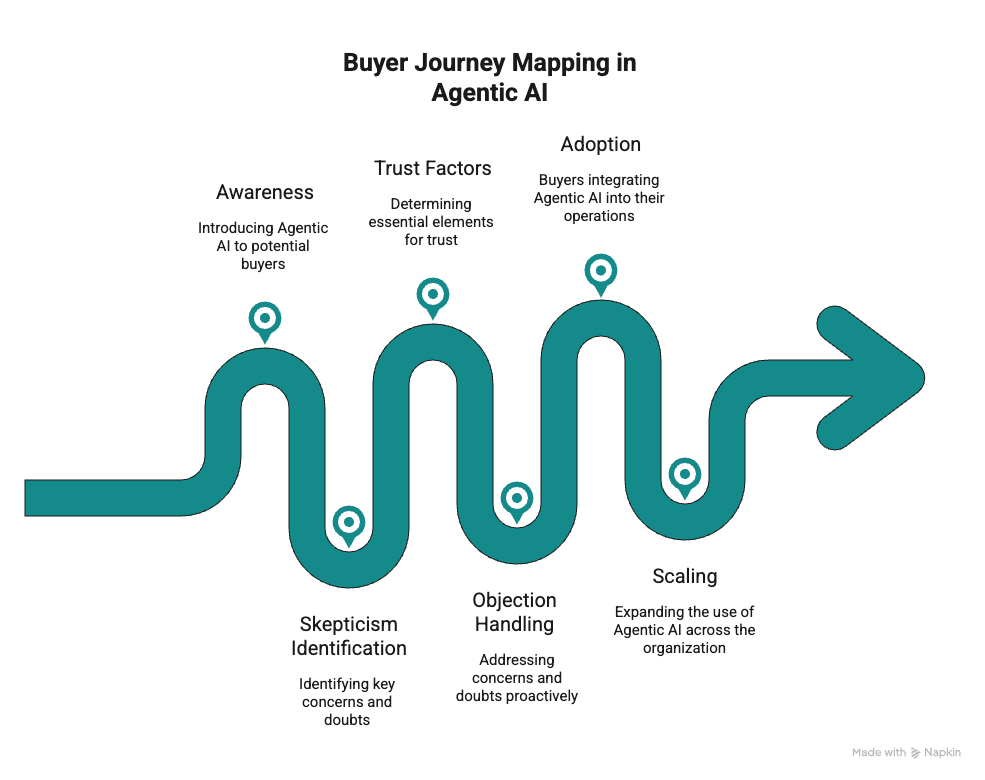

Buyer Journey Mapping: Addressing Skepticism and Building Trust

Map the entire buyer journey for Agentic AI, from awareness to adoption and scaling. Identify key skepticism points, potential blockers (e.g., security concerns, integration challenges, fear of job loss), and the trust factors essential for conversion.

Why it works: Helps vendors proactively address objections, build robust trust, and provide the right information at each stage of the decision-making process.

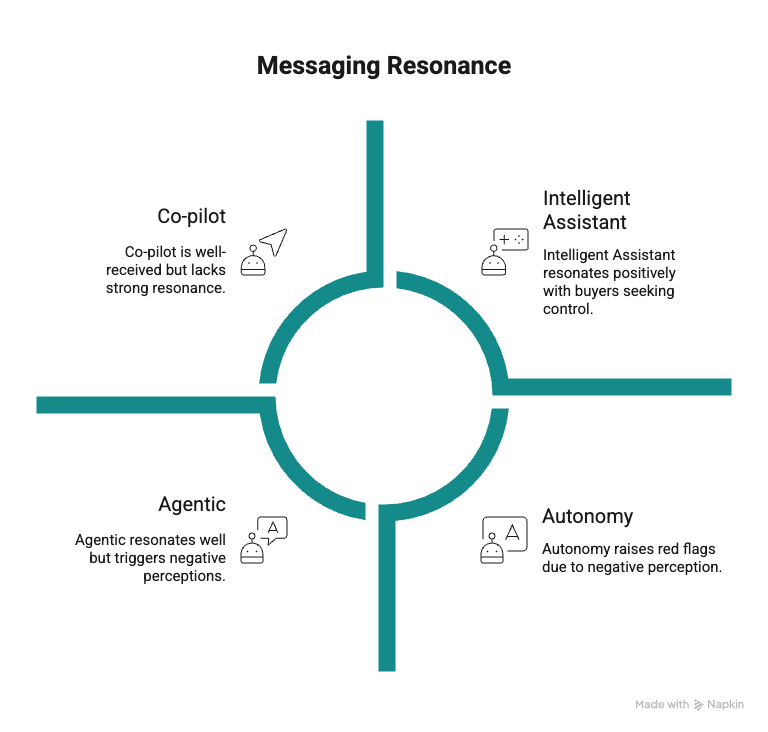

Messaging Research: Finding the Resonant Language

Test different messaging frameworks to determine whether terms like “autonomy” and “agentic” resonate positively or raise red flags. Explore alternative terminology such as “agent,” “co-pilot,” “workflow optimizer,” or “intelligent assistant” to find what best aligns with buyer desires for augmentation and control.

Why it works: Crafts compelling narratives that speak to buyer needs and build confidence, avoiding language that triggers fear or misunderstanding.

Agentic AI Market Research: Real Adoption Starts with Real Understanding

“We don’t need AI that replaces people. We need AI that understands people.”

– Fei-Fei Li, Co-Director, Stanford Human-Centered AI Institute

Agentic AI won’t scale simply because it’s powerful. It will scale when it becomes trusted, useful, and aligned with how people actually work.

That kind of alignment can’t be backfilled through ambition or technical brilliance alone. It starts with a deep, structured understanding of the real world—of workflows, behaviors, motivations, fears, and the messy constraints that define daily work.

In short: it starts with research.

If you’re committed to building Agentic AI that people trust and adopt, ask yourself: Have you spoken directly with the people doing the work you’re trying to automate? Not just executives or technical stakeholders, but the individuals who do that work, in the flow, every single day.

If you can’t confidently answer “yes,” give us a call. With nearly two decades of B2B tech research experience, we help Agentic AI teams uncover the context their tools are missing—and design for real adoption, not just impressive automation.

This blog post is brought to you by Cascade Insights®, a firm that provides market research & marketing services exclusively to organizations with B2B tech sector initiatives. If you need a specialist to address your specific needs, check out our B2B Market Research Services.