AI adoption isn’t optional – it’s already happening inside your organization, whether you sanctioned it or not. While leadership teams debate AI strategies and procurement departments evaluate vendors, employees across every department are quietly integrating AI tools into their daily workflows. They’re not asking for permission, and they’re not waiting for approval.

This creates a fundamental dilemma for leaders. Employees are acting decisively because AI tools are cheap, fast, and effective. Meanwhile, organizations are lagging behind, bogged down by slow policy cycles and cautious procurement processes. By the time the official AI strategy is approved, your workforce has already moved on to the next generation of tools.

But here’s the crucial reframe: shadow AI isn’t an organizational failure. Rather, it’s a wake-up call that your people are motivated, ambitious, and already innovating. They’re not being reckless; they’re being resourceful. They’re trying to succeed faster than the company can enable them.

Leaders who recognize why employees turn to these tools can redirect that energy into intentional, safe, and impactful adoption. The organizations that thrive won’t just manage shadow AI risks – they’ll channel employee motivations into a genuine competitive advantage.

Understanding Shadow AI: When Adoption Outpaces Strategy

Shadow AI refers to the use of unapproved, unsanctioned AI tools within an organization. It’s the natural evolution of “shadow IT,” but with lower barriers to entry and faster adoption cycles.

Why it’s happening:

- AI tools are cheap. Many are free—or cost less than a team’s coffee budget—and deliver ROI almost instantly.

- Pressure is mounting. Customers, competitors, and markets demand speed. Internal approval cycles can’t keep up.

- Policies are too slow. While companies spend months drafting governance frameworks, employees are solving problems in real time.

The result? A growing disconnect between organizational caution and individual initiative. Employees are optimizing today while leadership debates tomorrow.

The Human Story Behind Shadow AI: Understanding Motivations by Role

Employees using unsanctioned AI tools aren’t acting recklessly – they’re solving real business problems under real pressure. Sales teams are closing deals faster, marketing is scaling campaigns, and support teams are hitting tighter SLAs.

But without clear guardrails, today’s productivity can become tomorrow’s security problem. Sensitive CRM data, employee records, financial forecasts, or proprietary product plans could unintentionally end up stored in public AI systems – or even indexed on the open web.

These are some of the primary factors driving shadow AI adoption across roles – and the long-term security risks leaders need to manage:

| Persona | Business Pressure | Why They Use AI | Long-Term Security Risks |
| --- | --- | --- | --- |
| Sales Teams | Hit quotas, outpace competitors | Personalize outreach, accelerate pipeline, research prospects faster | CRM data or client details stored in public models; indexed data risks |
| Marketing Teams | Deliver more campaigns, higher quality | Content drafting, campaign ideation, SEO, personalization | AI-generated messaging may expose product roadmaps, pricing, or strategy |
| Customer Support | Reduce ticket resolution time, hit SLA targets | Chatbots, auto-summarizers, draft reply generation | Customer PII stored in third-party AI systems lacking compliance |
| Product Managers / R&D | Innovate faster, reach market first | Analyze feedback, brainstorm features, prototype rapidly | Proprietary product plans or feature specs leaked into tools with no IP protections |
| Tech Leaders / IT | Balance innovation with security | Pilot tools, explore efficiencies | Tool sprawl causes inconsistent data protections and lack of oversight |
| L&D | Upskill workforce quickly | Experiment with AI-based learning tools | Training data may include employee details sent to insecure systems |
| HR Teams | Improve engagement, streamline admin tasks | AI-powered surveys, chatbots, analytics | Employee PII or performance data handled by tools that don’t meet regulatory standards |
| Finance Teams | Drive efficiency, reduce costs, improve accuracy | Automate invoices, expenses, and forecasting | Financial records or sensitive forecasts stored insecurely or shared unknowingly |
| Legal / Compliance | Manage growing regulatory complexity | Use AI to review contracts and research faster | Faulty AI summaries miss risks; data shared with non-compliant tools |
| Executives / C-Suite | Signal innovation, deliver faster | Generate investor reports, board decks, strategic comms | AI-generated materials may include sensitive corporate strategy that becomes public |
| Procurement / Ops | Optimize supply chains, manage vendor complexity | Vendor analysis, forecasting, contract workflows | Supplier pricing or contract details exposed via unsecured AI platforms |

Before You Celebrate Productivity Gains, Check the Data Trail

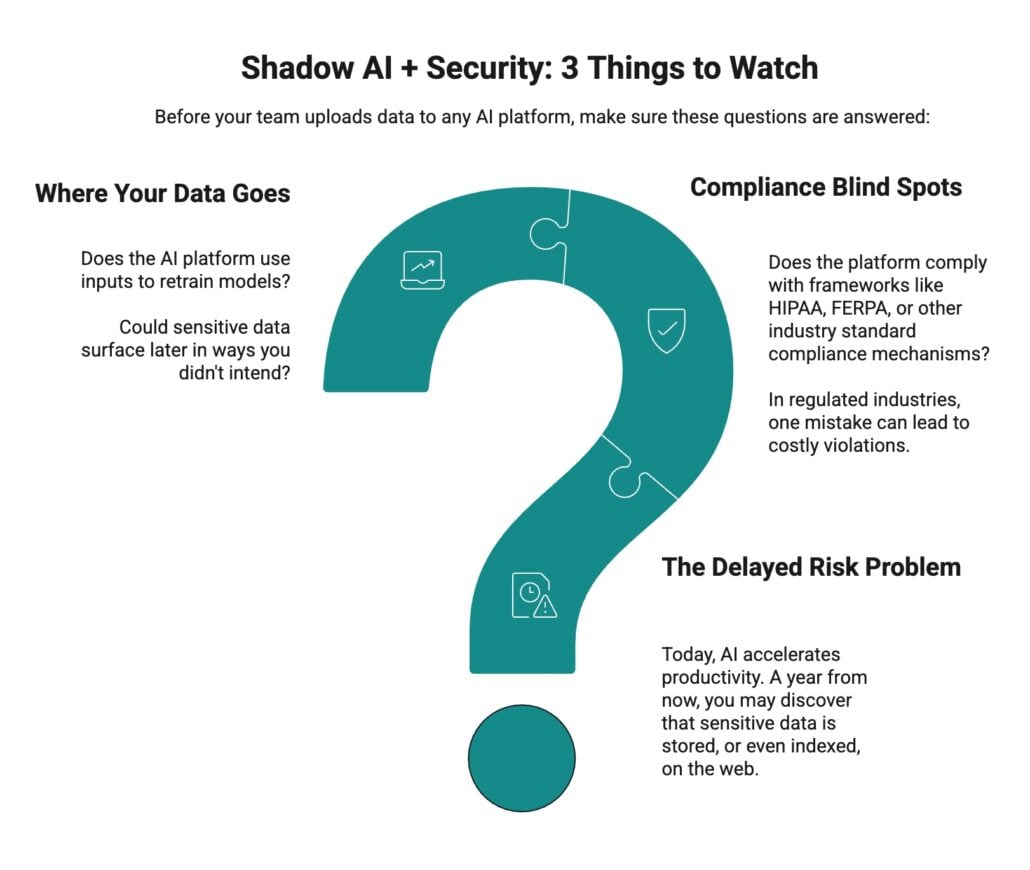

AI tools are helping teams work smarter and faster—but speed can create hidden risks if data flows aren’t understood. Even when employees are solving real business problems, sensitive information like CRM records, contracts, or forecasts can unintentionally end up in the wrong places.

Unchecked, these small exposures can accumulate into significant compliance and security risks. Leaders need clarity on where data goes, how it’s handled, and which AI tools are safe for sensitive information.

Why Shadow AI Persists: The Organizational Lag

Shadow AI continues despite known risks because of organizational dynamics that widen the gap between leadership intentions and employee needs. These organizational lags include:

Slow Policy Cycles

Comprehensive AI policies take months to write. Security reviews, stakeholder approvals, and multiple revisions create delays that feel glacial compared to the pace of tool adoption.

- Employees can start using a new AI tool in minutes.

- By the time policies are finalized, the AI landscape has already shifted.

- The result: policies are outdated before they even roll out.

Tool Paralysis

Leaders often overanalyze tools, slowing progress while employees move ahead.

- Endless vendor evaluations and feature comparisons stall decision-making.

- Pricing negotiations drag on while teams need solutions today.

- Employees bypass the delays and grab tools that solve immediate problems.

- Bottom line: the perfect becomes the enemy of the functional.

Control Over Enablement

Organizations tend to default to blocking or banning tools rather than creating safe pathways for adoption.

- Employees still need AI to work faster and smarter.

- Bans push usage underground, removing opportunities for oversight and guidance.

- Without structured enablement, shadow AI becomes the path of least resistance.

Mixed Signals From the Top

Employees are hearing two conflicting messages from executive leadership:

- Message #1: “Move faster.” “Be more productive.” “Use AI to innovate.”

- Message #2: “Our standards policy will be out by the end of the year,” or “at the start of 2026.” Neither is fast enough for employees who are trying to act on message #1.

This tension drives shadow AI adoption:

- Teams hear the productivity mandate loud and clear.

- They see competitors leveraging AI to win customers, reduce costs, and innovate faster.

- But without tools or guardrails, they’re forced to figure it out themselves.

The Asimov Paradox: Why Employees Go Rogue

This dynamic mirrors Asimov’s Three Laws of Robotics — rules that made sense individually but caused paralyzing conflicts when applied together. Asimov’s robots were programmed to:

- Avoid harming humans.

- Obey human commands unless they caused harm.

- Protect themselves unless it conflicted with the higher laws.

When these directives clashed, the robots froze — unable to reconcile competing priorities. Employees today face the same kind of gridlock:

- Law 1: Move fast. Innovate with AI.

- Law 2: Wait for governance standards (coming “soon”).

- Law 3: Protect yourself and the company from compliance and security risks.

When speed conflicts with compliance, employees do what Asimov’s robots couldn’t: they adapt. They resolve the paradox by going underground — using AI tools outside official channels to fulfill the productivity mandate they’ve been given.

The Fix: Asimov eventually introduced a “Zeroth Law” – a higher-order principle that took precedence over the other three. Perhaps organizations need their own equivalent: “Enable responsible innovation that serves the organization’s long-term interests.” This could help employees navigate the tension.

4 Steps to Turning Shadow AI Into Competitive Advantage

If the Asimov paradox explains why shadow AI persists, the solution is building a clear path forward: empowering employees to innovate without exposing the organization to unnecessary risk.

The goal isn’t to prohibit shadow AI — it’s to channel the momentum your teams already have into structured, secure adoption that aligns individual needs with organizational goals. Here’s how:

1. Strategic Clarity: See the Big Picture

Effective AI leadership begins with visibility. Too often, leaders act without knowing their AI maturity or competitive standing, resulting in scattered initiatives and missed opportunities. The first step is to get granular: you can’t optimize a process you don’t understand.

Before you even think about which AI tools to adopt, you must map your business processes. This means understanding the “Job to be Done” for each role and the specific workflows they follow. For example, what is the exact step-by-step process for “employee onboarding”? Or “customer support ticket resolution”?

This process mapping reveals:

- Existing inefficiencies: Where are the bottlenecks, redundant tasks, and manual handoffs?

- Hidden friction points: Where are employees getting stuck and turning to unsanctioned tools?

- Opportunities for AI: Where can an AI tool truly create value, not just add another layer of complexity?

Once you have a clear map of your current workflows, you can then proceed with a broader readiness assessment across four dimensions:

- Technology stack

- Data infrastructure

- Talent capabilities

- Workflow maturity (building on your initial process maps)

Where are the gaps? What foundational elements must be strengthened before AI adoption can scale?

From there, benchmark against peers and competitors. Understanding your relative standing helps you prioritize opportunities and spot areas of differentiation. What are others doing that you aren’t? Where can you leap ahead?

Finally, evaluate opportunities through a prioritization lens:

- Impact – Does this solve a real problem identified in your process map?

- Effort – Can it be tested quickly with current resources?

- Ethical risk – What’s the worst-case scenario?

- Readiness – Do your people have the training and guardrails?
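To make the prioritization lens repeatable across teams, the four criteria can be turned into a simple weighted score. The sketch below is one possible approach, not a standard method: the 1-to-5 rating scale, the weights, and the example opportunities are all assumptions chosen for illustration, and you would tune them to your own context.

```python
from dataclasses import dataclass

# Illustrative weights (assumption): impact counts double the other criteria.
WEIGHTS = {"impact": 0.4, "effort": 0.2, "ethical_risk": 0.2, "readiness": 0.2}


@dataclass
class Opportunity:
    name: str
    impact: int        # 1 = marginal, 5 = solves a bottleneck from the process map
    effort: int        # 1 = quick test with current resources, 5 = major project
    ethical_risk: int  # 1 = benign worst case, 5 = severe worst case
    readiness: int     # 1 = no training or guardrails, 5 = fully prepared


def priority_score(opp: Opportunity) -> float:
    """Higher is better. Effort and ethical risk are inverted (6 - rating)
    so that low effort and low risk raise the score."""
    return round(
        WEIGHTS["impact"] * opp.impact
        + WEIGHTS["effort"] * (6 - opp.effort)
        + WEIGHTS["ethical_risk"] * (6 - opp.ethical_risk)
        + WEIGHTS["readiness"] * opp.readiness,
        2,
    )


def rank(opportunities: list[Opportunity]) -> list[Opportunity]:
    """Return opportunities sorted from highest to lowest priority."""
    return sorted(opportunities, key=priority_score, reverse=True)


# Hypothetical candidates, rated by a cross-functional review group.
candidates = [
    Opportunity("Contract review", impact=5, effort=4, ethical_risk=5, readiness=2),
    Opportunity("Support reply drafting", impact=4, effort=2, ethical_risk=2, readiness=3),
]

for opp in rank(candidates):
    print(f"{opp.name}: {priority_score(opp)}")
```

A score like this is a conversation starter rather than a decision: a high-impact idea with a severe worst case still deserves a human judgment call, whatever the arithmetic says.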

2. People Power: Build a Network of AI Advocates

AI adoption succeeds or fails on people, not technology. The best strategy in the world won’t matter if employees don’t embrace the tools.

That’s why you need AI champions – curious, credible individuals with an experimentation mindset. These aren’t always senior or technical leaders. They’re often the ones already testing tools, asking smart questions, and eager to share learnings.

Give them structured spaces to experiment and collaborate:

- Pilot groups

- “AI office hours”

- Internal challenges

Support them without micromanaging. Provide cover from leadership, reward learning speed over perfection, and celebrate small wins. Your role is to create psychological safety for experimentation while staying aware of the risks.

3. Process Discipline: Innovate Without Losing Control

Experimentation without governance quickly becomes dangerous. To scale safely, leaders need a balance of innovation and control.

Start with an audit of existing shadow AI use: What tools are in play? What data is being shared? Where are the vulnerabilities?

Then move into a phased rollout:

- Audit & Educate – Map usage, raise awareness, set safe-use guidelines.

- Targeted Pilots – Focus on low-risk, high-impact areas like marketing or customer support.

- Scale With Safeguards – Expand proven pilots, implement KPIs, governance, and compliance.

Not all functions should be treated the same. High-risk areas like finance, legal, and compliance need strict oversight. Customer-facing teams need clear brand guardrails. Operations may allow more flexibility, provided data protocols are in place.
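The function-by-function distinction can be captured in a small lookup that an audit script consults when reviewing each tool it finds. This is a minimal sketch under stated assumptions: the tier labels, the choice of sales and customer support as the "customer-facing" teams, the `AuditEntry` fields, and the rule that unlisted functions default to strict oversight are all hypothetical.

```python
from typing import TypedDict

# Oversight tiers follow the paragraph above; labels are illustrative.
OVERSIGHT_BY_FUNCTION = {
    "finance": "strict",
    "legal": "strict",
    "compliance": "strict",
    "sales": "brand_guardrails",             # assumed customer-facing
    "customer_support": "brand_guardrails",  # assumed customer-facing
    "operations": "flexible",
}


class AuditEntry(TypedDict):
    tool: str                    # name of the AI tool found in the audit
    function: str                # business function using it
    shares_sensitive_data: bool  # whether sensitive data is sent to the tool


def oversight_for(function: str) -> str:
    """Look up the oversight tier; unknown functions default to strict (assumption)."""
    return OVERSIGHT_BY_FUNCTION.get(function.lower(), "strict")


def needs_immediate_review(entry: AuditEntry) -> bool:
    """Flag entries that touch sensitive data or belong to a strict-tier function."""
    return entry["shares_sensitive_data"] or oversight_for(entry["function"]) == "strict"


# Hypothetical audit findings.
inventory: list[AuditEntry] = [
    {"tool": "Example Chat Tool", "function": "operations", "shares_sensitive_data": False},
    {"tool": "Example Summarizer", "function": "legal", "shares_sensitive_data": False},
    {"tool": "Example Chat Tool", "function": "sales", "shares_sensitive_data": True},
]

flagged = [e for e in inventory if needs_immediate_review(e)]
for entry in flagged:
    print(f"Review: {entry['tool']} used by {entry['function']}")
```

Defaulting unknown functions to the strictest tier is a deliberately cautious choice; a team can always be moved to a lighter tier once its data protocols are confirmed.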

4. Trust and Transparency: Build a Culture That Embraces AI

Even the smartest strategy will fail if employees don’t trust it. AI adoption often stalls because fears go unaddressed:

- Job displacement – “Will AI replace me?”

- Skills obsolescence – “If I don’t learn this now, will I fall behind?”

- Unclear purpose – “Why is the company using this, and what does it mean for me?”

Leaders need to confront these concerns directly. Frame AI as augmentation, not replacement, and share role-specific examples. Position AI training as career advancement, not remedial upskilling.

Above all, communicate clearly and consistently. Ensure that employees know the goals, the boundaries, and how decisions are being made. Uncertainty is what drives shadow adoption deeper underground.

Mastering the Moves: Leading Shadow AI Adoption

“The only way to make sense out of change is to plunge into it, move with it, and join the dance.” – Alan Watts

Change is happening, whether you’re leading it or not. Your employees are already in the dance of innovation, moving with the rapid pace of AI advancement. The question is no longer whether to participate, but how to lead the choreography. It’s time to help them master the steps.

To begin this transformation, you need to understand your current reality. Start by conducting an informal “shadow AI audit”:

- Ask teams what tools they’re using to get work done faster.

- Review recent high-quality outputs and ask how they were created.

- Survey employees anonymously about their AI experimentation.

You’ll likely discover more innovation than you expected – and more risk than you’re comfortable with. That’s your starting point for transformation.
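Once the anonymous survey responses come in, even a few lines of scripting can show which tools are most widespread and which teams rely on them. The following is a rough sketch, assuming the responses have been exported as simple (team, tool) pairs; the team names and tool names are placeholders, not real products.

```python
from collections import Counter, defaultdict

# Hypothetical anonymous survey rows: (team, tool the respondent reported using).
responses = [
    ("sales", "Chat Tool A"),
    ("sales", "Chat Tool A"),
    ("marketing", "Chat Tool A"),
    ("marketing", "Image Tool B"),
    ("support", "Summarizer Tool C"),
]


def tally_by_tool(rows):
    """Count how many respondents reported each tool."""
    return Counter(tool for _, tool in rows)


def teams_by_tool(rows):
    """Map each tool to the sorted list of teams that reported using it."""
    teams = defaultdict(set)
    for team, tool in rows:
        teams[tool].add(team)
    return {tool: sorted(found) for tool, found in teams.items()}


usage = tally_by_tool(responses)
spread = teams_by_tool(responses)

# List tools from most to least reported, with the teams using each.
for tool, count in usage.most_common():
    print(f"{tool}: {count} respondent(s), teams: {', '.join(spread[tool])}")
```

Tools that show up across several teams are natural candidates for the first sanctioned pilots, since demand for them is already proven.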

Need help transforming shadow AI into a strategic advantage?

With 20 years of B2B technology market research expertise, we have a deep specialization in understanding the personas, motivations, and behavioral drivers across organizational roles and functions. We can help you lead through the chaos of shadow AI adoption and use it for competitive advantage.