Market research in 2026 feels less like a steady march forward and more like navigating a coastline in a heavy fog. You can hear the engines of GenAI humming, but you can’t quite tell if you are steering toward open water or jagged rocks. In Part 1 of this series, we mapped the forces pushing AI from a laboratory experiment to a daily expectation. That exploration made one truth undeniable: the industry has reached a delta where the path splits in a dozen directions.

The natural instinct is to guess which channel is the “right” one and bet the entire ship on that single heading. However, in an era of agentic workflows, synthetic users, and rapidly shifting trust, the traditional urge to predict is a trap. It creates false confidence, like following navigation charts that haven’t been updated for the current conditions.

Success in this environment requires a navigation system built for uncertainty itself. This is where we turn to scenario planning.

Why Scenario Planning Works When Prediction Fails

Here, we’re using the exploratory approach to scenario planning developed by Thomas J. Chermack in his book Scenario Planning in Organizations and practiced by firms like Chermack Scenarios.

Traditional strategic planning asks: “What’s most likely to happen?” It locks you into one forecast, often too early, with too much confidence. Exploratory scenario planning asks different questions:

- “What could happen?”

- “How would we need to operate in each case?”

- “Which capabilities keep us competitive across multiple futures?”

Scenario planning doesn’t predict the future. It prepares you for it. You stress-test decisions against multiple plausible outcomes before committing resources, so you build capabilities that remain valuable no matter which future unfolds. You stop drifting and start steering.

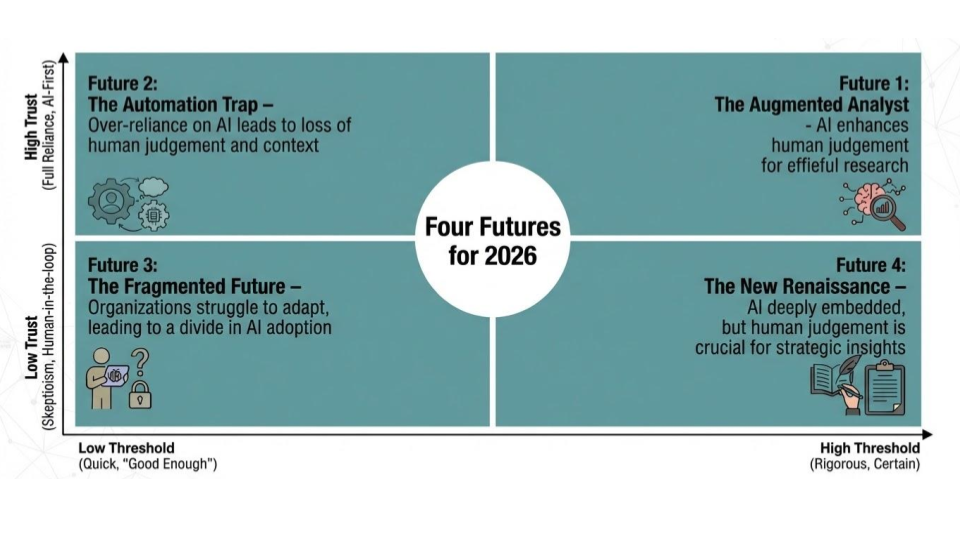

Two Uncertainties That Will Define 2026

The future isn’t a single destination, but rather a range of possible courses shaped by how key uncertainties resolve. Through extensive conversations with clients, analysts, and research leaders, two critical questions keep surfacing:

- “How much will we trust AI-generated insights to drive real decisions?”

- “How rigorous does research need to be before we’re willing to act on it?”

How your organization answers these questions through policy, investment, and practice will determine which of four distinct futures you navigate toward by late 2026.

A note on customization: If different uncertainties feel more urgent for your organization, like regulatory changes, talent retention, or client concentration, the same exploratory framework applies. In Part 3, we’ll show you how to identify your own critical uncertainties and build scenarios tailored to your specific context. The methodology is universal; the variables are yours to define.

Uncertainty #1: Trust in AI-Derived Outputs

How much will organizations trust AI-derived insights to inform real decisions?

This uncertainty isn’t about whether AI will be used. That’s already happening. It’s about whether insights generated largely by models themselves (prompt-based syntheses, LLM answers, synthetic outputs) will be trusted enough to act on without extensive human validation.

Why this remains uncertain:

- Validation and transparency standards are still emerging

- Liability and accountability concerns remain unresolved

- Tolerance for risk varies by decision type and consequence

- Early failures can quickly erode confidence

What this means for insights professionals:

Low trust positions researchers as validators and interpreters. High trust shifts the role toward designing prompts, curating data, and overseeing AI-driven workflows.

What this means for stakeholders:

Low trust slows delivery but increases confidence. High trust delivers speed and cost efficiency, with greater acceptance of probabilistic and revisable insights.

Low trust: AI outputs treated as exploratory inputs

High trust: AI-derived insight accepted as decision-ready

Uncertainty #2: The Threshold for “Good Enough” Research

What level of rigor and certainty will organizations require before acting on insight?

As GenAI reduces the time and cost to produce insight-like outputs, teams are re-evaluating what qualifies as sufficient evidence for decision-making. The unresolved question: will speed-driven organizations accept directionally useful insight, or will high-stakes decisions continue to demand deep, human-led research?

Why this remains uncertain:

- Speed and cost pressures are intensifying

- Competitive dynamics reward faster movers

- Poor decisions can reinforce demand for depth

- Organizations vary in their ability to assess insight quality

What this means for insights professionals:

A low threshold favors rapid sense-making and iteration. A high threshold elevates researchers into strategic designers of rigorous, defensible studies.

What this means for stakeholders:

A low threshold enables faster action with higher risk. A high threshold delivers stronger conviction at the cost of time and investment.

Low threshold: Directionally useful insight is enough

High threshold: Deep, validated understanding is required

From Uncertainty to Four Plausible Futures

Plotting these two uncertainties together creates a 2×2 framework that yields four plausible futures for market research in 2026. Each future reflects a different combination of trust in AI-derived outputs and tolerance for “good enough” insight.

These futures are not predictions. They are navigation tools. They’re structured thought experiments designed to help you stress-test today’s decisions against multiple possible outcomes and clarify what capabilities matter most under different conditions.

The four futures that follow explore what each of these worlds could look like for research teams, clients, and individual researchers.

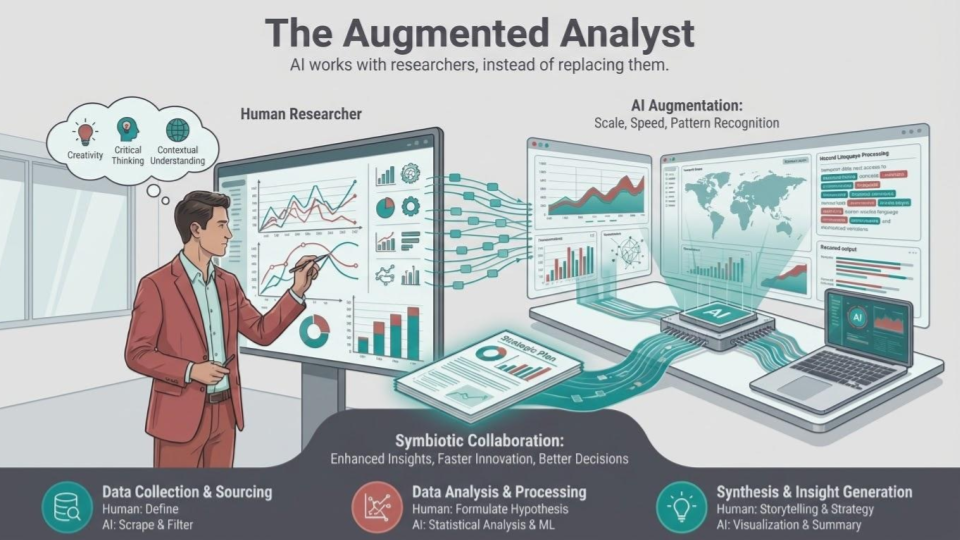

Scenario 1: The Augmented Analyst

In this future, GenAI is everywhere but never unchecked. Teams adopt it where it clearly accelerates work and deliberately avoid using it where judgment, context, and accountability matter most. Research is faster, but it is also more careful. The role of the researcher is not reduced. It is clarified.

A day in the life:

Sarah, a senior insights manager, starts her Monday reviewing tracker data that has already been cleaned, normalized, and flagged by AI for anomalies. Instead of scanning spreadsheets, she focuses on deciding which signals deserve attention and which are noise.

By Tuesday, she’s preparing for qualitative follow-ups. Some initial interviews are conducted by AI interviewers using a structured guide, allowing Sarah to quickly test hypotheses and identify themes at scale. She then refines the next round of probes, adding questions shaped by her knowledge of the category, the client’s internal dynamics, and what failed last quarter.

By Thursday, interviews are complete. Transcripts are processed. Draft themes are waiting. Sarah spends her time pressure-testing interpretations, debating implications with product and marketing leaders, and shaping a narrative that connects customer friction to concrete go-to-market decisions.

What once took six to eight weeks now takes two to three, not because corners were cut, but because execution was compressed and judgment was protected.

The dynamic:

- AI removes friction from the work

- Humans supply meaning

- The job becomes more interesting

- Research retains its strategic seat

The risk: Complacency. Teams that stop evolving may find themselves overtaken by organizations willing to rethink research more radically.

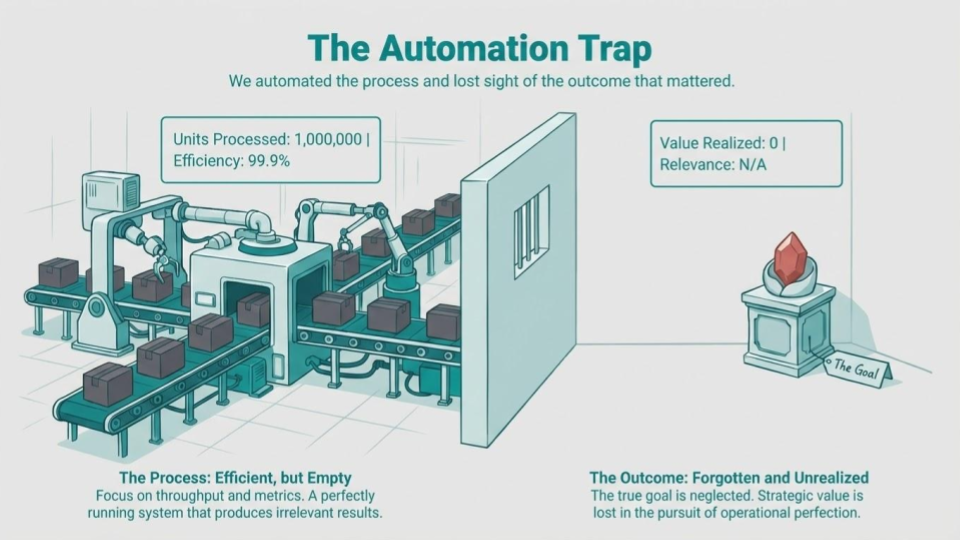

Scenario 2: The Automation Trap

This future begins with enthusiasm. A leadership team watches an AI platform generate a polished report in under an hour. The charts look sharp. The language sounds confident. The cost savings are impossible to ignore. Budgets shift quickly. Headcount quietly shrinks.

How it unravels:

A researcher logs in to review an AI-generated summary before sending it to stakeholders. There’s little time or incentive to challenge the output. Context is missing, but no one has budgeted time to add it back. The system flags trends, but it doesn’t know about:

- The competitor recall that skewed sentiment

- The internal reorg that changed buying behavior

- The pricing experiment that distorted baseline metrics

Those details live in people’s heads, and many of those people are gone.

The slow decline:

- Recommendations become vague and interchangeable

- Reports sound reasonable but rarely decisive

- Stakeholders stop asking follow-up questions

- Decision quality declines slowly, then visibly

- When outcomes disappoint, no one knows if the issue was the data, the model, or the decision itself

The exodus: The most experienced researchers leave first. Those who remain manage tools rather than shape thinking. Research becomes a box to check rather than a partner in strategy.

Why this is hard to reverse: Once trust erodes and tacit expertise walks out the door, rebuilding takes years. Expectations reset to fast and cheap, even when fast and cheap no longer delivers value.

Scenario 3: The New Research Renaissance

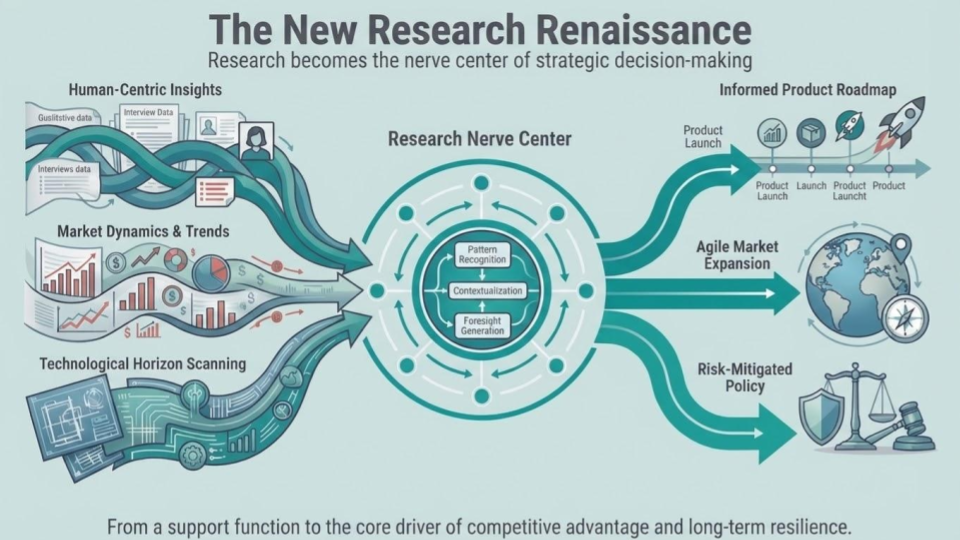

In this future, GenAI is deeply embedded, but so are researchers. Market research no longer operates as a series of episodic projects. It functions as a living insight system that continuously integrates quantitative data, qualitative input, behavioral signals, and market intelligence. AI connects the dots. Humans decide which dots matter.

A day in the life:

A product leader asks during a Tuesday standup: “Our enterprise churn is up 12% this quarter. Is this a pricing issue, a product gap, or something else?”

Instead of commissioning a two-month study, the insight platform immediately:

- Pulls three years of churn data segmented by customer profile

- Surfaces patterns from recent exit interviews and support tickets

- Identifies analogous situations from adjacent product lines

- Flags contradictory signals that need human interpretation

- Recommends three targeted interviews with at-risk accounts

Within 48 hours, researchers deliver a grounded answer, accelerated by AI and validated by human judgment. The team discovers it’s not pricing; it’s a gap in onboarding for a specific buyer persona that emerged post-acquisition.

How researchers spend their time:

- Designing the right questions, not just answering them

- Validating signals and stress-testing interpretations

- Translating patterns into strategy

- Socializing insights across product, marketing, and corporate strategy teams

Insight no longer arrives at the end of a project. It’s embedded directly into everyday decision workflows.

The business impact:

- Products land better

- Marketing becomes more precise

- Teams pivot earlier, before metrics drift

- Research becomes a defensible advantage (tools commoditize, but learning systems don’t)

The risk: Complexity. Systems can sprawl, and technology can outpace understanding. Teams must continually invest in people, process, and culture to keep the system healthy. When they do, research becomes indispensable.

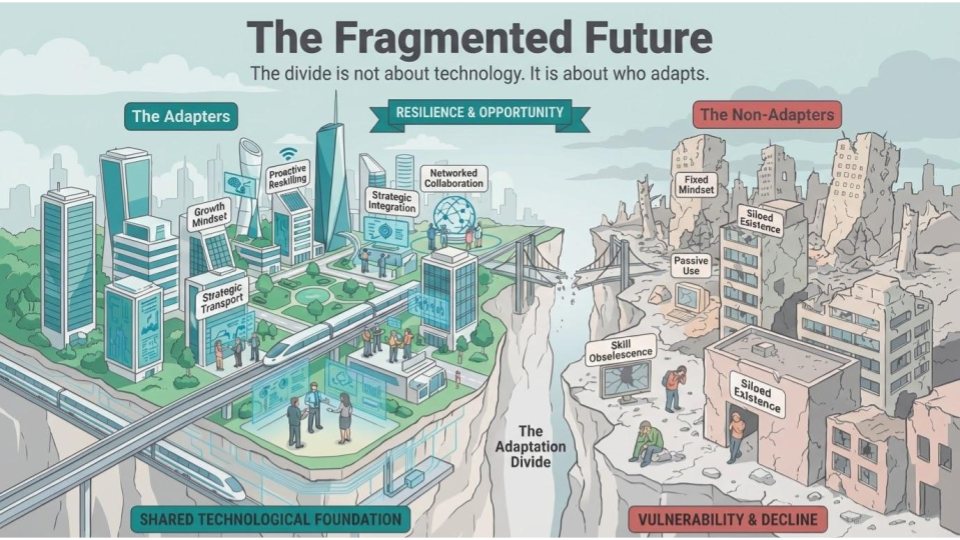

Scenario 4: The Fragmented Future

In this future, the industry does not move together. It splits. Some organizations redesign workflows, retrain talent, and integrate AI deeply. Others remain stuck in pilots, debates, and partial adoption. A shrinking middle struggles to serve both worlds.

The slow-moving organization:

A researcher spends much of her week managing workarounds while adoption stalls around her:

- Tools are evaluated but never fully implemented

- Leadership remains cautious, but clients are not

- Turnaround times stretch

- Bids are lost to faster, more integrated competitors

- Talented colleagues leave, often publicly

- Recruiting becomes harder each quarter

The AI-native organization:

Meanwhile, AI-native teams compound their advantage. They move faster, learn faster, and position research as a strategic asset. The gap widens not because of access to technology, but because of willingness to change.

The remaining options:

Organizations in this future still have choices:

- Commit fully and move toward the Augmented Analyst or Research Renaissance paths

- Narrow focus and become boutique specialists

- Partner, merge, or be acquired

- Exit gracefully

What they cannot do is wait. In this future, indecision is a decision, and the cost of delay rises every quarter.

Why These Futures Matter (And Why You Can’t Afford to Ignore Them)

None of these futures is purely hypothetical. Elements of all four are already visible today, often within the same organization and sometimes on the same team. What matters is recognizing which future you’re drifting toward, and whether that direction is intentional or accidental.

The trade-offs are real, and they affect everyone differently.

For business leaders: Some futures optimize for speed and cost at the expense of decision quality. Others demand greater upfront investment but create more durable strategic advantage. Are you building a system that delivers faster answers, or one that enables better decisions?

For researchers: Some paths expand influence, creativity, and professional growth. Others narrow the role to execution, with limited room for judgment or impact. Are you designing for human expertise to matter more, or less?

For clients and stakeholders: The difference shows up in trust. Do insights genuinely improve outcomes, or merely arrive faster and look polished? Are you buying speed, or certainty?

The risks are already visible. The Automation Trap promises efficiency and lower costs, but over time it erodes trust, flattens insight, and strips away the context that makes research valuable. The Fragmented Future creates widening gaps: clients receive uneven experiences, top talent leaves, and organizations find themselves unable to compete on either speed or depth.

The Augmented Analyst and Research Renaissance futures demand more: investment in people, governance, and change management. But they preserve what has always made research matter: judgment, context, and the ability to turn information into understanding. They don’t just make research faster. They make it more consequential.

Scenario planning exists to expose the consequences of today’s decisions before they quietly lock in tomorrow’s reality. Are we making deliberate choices, or drifting into a future we wouldn’t choose?

What Scenario Planning Reveals About Your Next Move

The cost of drift is higher than the cost of decision. When change accelerates, inaction can feel safe. In reality, it simply locks in default decisions about speed versus rigor, automation versus judgment, and the role research will play in the organization. Over time, those defaults harden into strategy, whether they were chosen deliberately or not.

The organizations that succeed won’t be the ones with the most confident predictions. They’ll be the ones that stay alert, maintain their heading, and intervene early enough to steer, before convenience starts masquerading as strategy.

Before moving on, take a moment to reflect:

- Which of the four futures feels closest to where you’re already heading?

- Where is trust in AI assumed rather than explicitly decided?

- Which decisions are being optimized for speed, and which truly require certainty?

- If nothing changes over the next 12 months, where do you realistically end up?

These questions are the real value of scenario planning. They make trade-offs visible and surface choices that are already being made quietly.

Coming Next: Building Your Own Scenario Plan

In Part 3, we’ll show you how to apply this framework inside your own organization. You’ll learn how to:

- Identify the uncertainties that matter most to you

- Map current practices against multiple plausible futures

- Make near-term decisions that hold up under uncertainty

The future of market research won’t be decided by GenAI alone. It will be shaped by how intentionally leaders choose to respond to it.